Meta implements facial recognition to combat celebrity image scams

In response to the growing wave of social media fraud, Meta is rolling out new facial recognition technology. The solution is supposedly designed to protect users from fake ads that use celebrities' images and to simplify recovering hacked accounts (so no more selfies holding your username? 😄).

A New Weapon in the Fight Against Scammers

Scammers constantly adapt their tactics, using increasingly sophisticated technologies, including deepfakes, to create convincing advertisements. Meta's response is a system that compares faces in ads with the profile pictures of public figures on Facebook and Instagram.

How Does the New System Work?

- The system automatically analyzes ads for potential fraud

- It uses facial recognition to compare faces in ads with public figures' profile pictures

- It blocks ads when it detects unauthorized use of a person's likeness

- Biometric data is deleted immediately after the comparison
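Meta has not published how its pipeline works, so the following is only an illustration of the general technique such systems commonly rely on: turn each detected face into a numeric embedding, compare it with reference embeddings using cosine similarity, and treat anything above a threshold as a match. It is a minimal sketch under several assumptions: the embeddings are already produced by some face-recognition model (not shown), and `Ad`, `screen_ad`, `MATCH_THRESHOLD` and the `authorized` map are hypothetical names, not Meta's API.

```python
import math
from dataclasses import dataclass

# Hypothetical similarity threshold; a real system would tune it on labeled data.
MATCH_THRESHOLD = 0.85


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine similarity of two equal-length vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


@dataclass
class Ad:
    advertiser: str
    # One embedding per face detected in the ad creative (extraction not shown).
    face_embeddings: list[list[float]]


def screen_ad(
    ad: Ad,
    protected_figures: dict[str, list[float]],
    authorized: dict[str, set[str]],
    threshold: float = MATCH_THRESHOLD,
) -> tuple[bool, list[str]]:
    """Return (blocked, names of figures whose likeness appears without authorization).

    protected_figures maps a public figure's name to a reference embedding
    taken from their profile picture; authorized maps that name to the
    advertiser IDs allowed to use the likeness.
    """
    matched: set[str] = set()
    try:
        for face in ad.face_embeddings:
            for name, reference in protected_figures.items():
                if cosine_similarity(face, reference) >= threshold:
                    matched.add(name)
    finally:
        # Stand-in for "delete biometric data after comparison": drop the ad's
        # face vectors whether or not a match was found.
        ad.face_embeddings.clear()
    unauthorized = sorted(
        name for name in matched if ad.advertiser not in authorized.get(name, set())
    )
    return bool(unauthorized), unauthorized


# Usage with toy 3-dimensional vectors (real embeddings have hundreds of dimensions).
figures = {"Public Figure A": [1.0, 0.0, 0.0]}
allowed = {"Public Figure A": {"official-page"}}

scam = Ad(advertiser="unknown-shop", face_embeddings=[[0.98, 0.05, 0.0]])
print(screen_ad(scam, figures, allowed))  # (True, ['Public Figure A'])
print(scam.face_embeddings)               # []
```

In Python, clearing the list only drops this object's references to the vectors; genuine deletion depends on how the surrounding system stores and logs data.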

Account Recovery Made Simpler

Meta is also testing facial recognition technology for identity verification when users try to recover access to their accounts. Instead of traditional document submission or "photos with profile names" (raise your hand if you've ever successfully unlocked your account this way), users will be able to record a short selfie video.

Privacy Safeguards

- All selfie videos are encrypted and securely stored

- Recordings are never publicly visible

- Biometric data is deleted immediately after verification

- Users maintain full control over the process

Geographical Limitations

The new solution will initially not be available in the European Union or the United Kingdom due to local data protection regulations. This is particularly significant given recent events in Poland, where the system could be put to use immediately.

Users' Voice: Helplessness in Fighting Scams

Looking at comments from Facebook and Instagram users, I see a terrifying pattern of systemic neglect. Hundreds of fake ad reports, dozens of requests to remove deepfakes, thousands of fraud reports, all dismissed with the standard formula "does not violate our community standards."

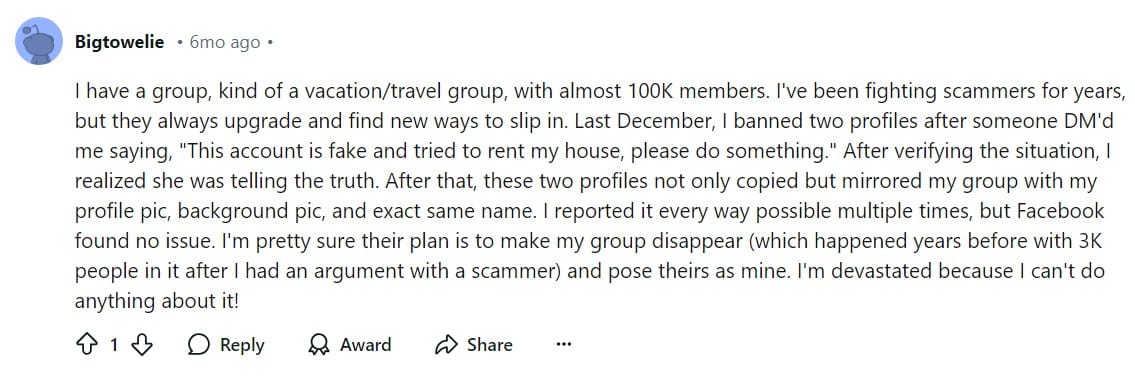

Interestingly, while obvious scams remain untouched, legitimate entrepreneurs often lose their accounts for alleged "impersonation." The absurdity of this situation is best illustrated by the administrator of a Facebook group with almost 100,000 members: when he reported scammers, not only were they not blocked, they went on to create a copy of his group with exactly the same graphics and name. Meta saw no issue with this.

Especially striking is the helplessness of content creators who watch their image being used in dozens of fraudulent advertisements. The reporting system is so ineffective that some are forced to create fake accounts to keep reporting scams after the scammers block their main profiles.

The irony is that the only effective way to get Meta to respond seems to be the threat of a lawsuit or contact with its legal department; that's when the "impossible" suddenly becomes possible. This reveals the company's true priority: not user safety, but minimizing legal risk.

The Rafał Brzoska Case

A perfect example of the urgent need for such solutions is the case of Rafał Brzoska, the Polish billionaire and founder of InPost. In 2024, Brzoska and his wife announced a lawsuit against Meta over fake advertisements using his image on Facebook and Instagram. Although he reported the issue in July, the company failed to resolve it effectively. NASK (Poland's National Research Institute) also issued warnings about the scam.

Particularly concerning is that:

- Scammers used advanced deepfake technology

- Lip-sync techniques were used to match mouth movements to a fake voice

- The clips were embedded in TV news program templates to appear more credible

- The problem also affected other public figures, including President Andrzej Duda and Robert Lewandowski

The Polish Personal Data Protection Office has already responded, ordering Meta Platforms Ireland Limited to stop displaying fake advertisements using the images of Brzoska and his wife in Poland for three months.

Other Examples of Scams and Their Impact

Notable figures such as Elon Musk and Martin Lewis have repeatedly been targeted by scams using their images. Lewis has said he receives "countless" reports every day about his image being used without permission in ads promoting investment schemes and cryptocurrencies.

Legal Regulations and European Commission Actions

As Meta introduces new technological solutions to combat deepfakes, significant legal action is under way in Europe. Particularly important is the Digital Services Act (DSA), which imposes specific content moderation obligations on online platforms.

Proceedings Against Meta

The European Commission has initiated formal proceedings against Meta, investigating potential DSA violations in several areas:

- Insufficient control over fraudulent advertising

- Lack of effective mechanisms for reporting illegal content

- Problems with user complaint handling systems

- Inadequate moderation of political content

Notably, similar proceedings have been opened against other social media platforms, including X (formerly Twitter) and TikTok. Penalties for DSA violations can reach 6% of a company's global annual turnover, which for Meta should be a serious motivation to act. However, whether revenue from deepfake ads exceeds that 6% remains to be seen.
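The trade-off in that last sentence is simple arithmetic: even in the best case for deterrence, a fine at the full 6% cap only hurts more than it pays if revenue from scam ads is below that cap. Here is a minimal sketch with purely hypothetical numbers (Meta does not disclose how much it earns from fraudulent ads); `max_dsa_fine` and `fine_outweighs_scam_revenue` are illustrative names, not part of any official tool.

```python
# Maximum DSA fine as a share of global annual turnover.
DSA_FINE_CAP = 0.06


def max_dsa_fine(annual_turnover: float) -> float:
    """Upper bound on a DSA fine for a given global annual turnover."""
    return DSA_FINE_CAP * annual_turnover


def fine_outweighs_scam_revenue(annual_turnover: float, scam_ad_revenue: float) -> bool:
    """True if even the maximum possible fine exceeds the revenue earned from scam ads."""
    return max_dsa_fine(annual_turnover) > scam_ad_revenue


# Purely hypothetical figures, in arbitrary currency units.
turnover = 100.0
print(fine_outweighs_scam_revenue(turnover, scam_ad_revenue=4.0))  # True: cap of 6 > 4
print(fine_outweighs_scam_revenue(turnover, scam_ad_revenue=8.0))  # False: cap of 6 < 8
```

The comparison is deliberately simplified: it ignores the probability of actually being fined, the fact that 6% is a ceiling rather than a typical penalty, and any reputational costs.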

Grassroots Initiatives

Particularly moving is the story behind one of the most promising solutions in the fight against deepfakes. Breeze Liu, who herself fell victim to deepfake pornography in 2020, turned her trauma into action by creating the startup Alecto AI.

Liu's story shows how serious the lack of effective legal and technological tools against such abuse is:

- Berkeley police could not help, even though Liu knew the suspect

- She discovered over 800 links containing fabricated material with her image

- Bureaucratic obstacles prevented effective content removal

- After the first video was removed, the perpetrator spread more across hundreds of other sites

In response, Liu drew on her experience in the cryptocurrency sector to create free facial recognition software that:

- Allows victims to quickly identify fabricated content

- Works with major platforms to remove illegal material

- Gives victims control over their own images online

- Serves as a "first line of defense" against such abuse

This demonstrates the importance of grassroots initiatives in complementing the actions of tech giants and regulators. While Meta is only testing its solutions and the European Commission conducts administrative proceedings, private initiatives like Alecto AI are already offering concrete help to victims.

Problems in the System

Liu's case also reveals systemic problems in fighting deepfakes:

- Lack of specific legal regulations regarding the creation and distribution of deepfake pornography

- Insufficient preparation of law enforcement agencies

- Difficulties in coordinating actions between different platforms

- Growing availability of advanced AI tools facilitating fake content creation

The Innovation Paradox

Paradoxically, while Meta works to make its platforms safer, some of its new features could increase the risk of fraud. At Meta Connect 2024, the company presented new Meta AI voice capabilities that enable natural spoken conversations across all of its major apps.

I can't help making an ironic observation: while Meta fights deepfakes, it is also providing advanced tools for voice and image manipulation. One might joke that the platform is unwittingly drifting towards a "SaaS" model, except that here SaaS stands for "Scam-as-a-Service" rather than "Software-as-a-Service."

What Could Go Wrong?

- Scammers could use automatic dubbing features to create fake videos in multiple languages

- New lip-sync functionality might make deepfakes even more convincing

- Combining voice recognition with speech synthesis could make it easier to fake phone calls

This shows how thin the line is between innovation and threat. Meta may find itself having to build ever more advanced protection systems against the very abuses it unknowingly enables.

User Guidelines

Observing the situation from both technological and legal perspectives, I see several key recommendations for social media users:

- Use the available mechanisms for reporting illegal content: the DSA requires platforms to handle such reports diligently (yes, I know reporting ads on FB/Google is torture, but it leaves a trace)

- Monitor the development of defensive tools like Alecto AI

- Pay special attention to advertisements using celebrity images

- Document violations - they may be useful in legal proceedings

- Report serious violations not only to platforms but also to relevant regulatory authorities

Scale of the Problem

- Meta has removed 8,000 fake advertisements since April 2024 (a pity it doesn't share how many deepfake reports it received)

- The problem grows with deepfake technology development

- Scammers frequently promote fake investment schemes

- Users' financial losses can be significant

Future of Facial Recognition Technology

This is Meta's first significant development in facial recognition systems in a decade. The company withdrew its previous automatic photo tagging feature in 2021 due to privacy and accuracy concerns. According to Meta, the current solution has been designed with maximum security and user privacy protection in mind.

The Brutal Truth About Fighting Scams

Analyzing Meta's actions, I can't shake the feeling that we're watching a carefully orchestrated PR spectacle. The company presents its new facial recognition technology as a breakthrough in fighting deepfakes, but let's face it: Meta is not a charity but a profit-driven business.

Simple business math points to an uncomfortable truth: revenue from advertisements, even those created by scammers, significantly outweighs potential regulatory fines. Even the maximum DSA penalty of 6% of annual turnover is merely a cost of doing business for Meta, a drop in the ocean of its advertising profits.

Criminals understand this dynamic perfectly. They know that as long as their activity generates advertising revenue, platforms will turn a blind eye to "imperfections" in moderation systems. The cases of Rafał Brzoska and Breeze Liu aren't exceptions - they're symptoms of a much deeper, systemic problem.

So perhaps instead of more spectacular announcements of new security measures, it's time for an uncomfortable question: do we really want to entrust our security to a company that profits from our lack of security?
